Universitat Autònoma de Barcelona

- Offer Profile

- Welcome to the Advanced Driver Assistance Systems research group, part of the Computer Vision Center, based at the Universitat Autònoma de Barcelona, Spain.

Our group focuses on combining computer vision techniques such as pattern recognition, feature extraction, learning, tracking and 3D vision to develop real-time algorithms that assist the driving activity.

Product Portfolio

Projects

- Intelligent vehicles are cars, trucks, buses, etc. in which sensors and control systems have been integrated to assist the driving task, hence the name Advanced Driver Assistance Systems (ADAS).

The aim is to combine sensors and algorithms to understand the vehicle's environment so that the driver can receive assistance or be warned of potential hazards. Vision is the most important sense employed in driving, so cameras are the most widely used sensors in these systems.

In this context, our group focuses on combining computer vision techniques such as pattern recognition, feature extraction, learning, tracking and 3D vision to develop real-time algorithms that assist the driving activity. Examples of assistance applications are Lane Departure Warning, Collision Warning, Automatic Cruise Control, Pedestrian Protection and Headlights Control.

The group was founded in 2002 and is led by Dr Antonio M. López. It is composed of nine doctors and thirteen PhD students. Currently the group is supported by the Spanish Ministry of Education and Science (MEC) Research Project TRA2011-29454-C03-00.

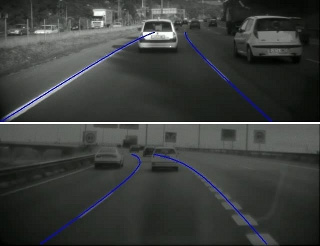

Lane markings

- Detection of lane markings based on a camera sensor can be a low-cost solution for lane departure and curve overspeed warning. A number of methods and implementations have been reported in the literature. However, reliable detection is still an issue because of cast shadows, worn and occluded markings, variable ambient lighting conditions, etc.

We focus on increasing the reliability of detection while also computing relevant road geometric parameters such as curvature, lane width and vehicle position within its lane. Video sequences are processed frame by frame, that is, no temporal coherence or continuity is enforced. Our method takes at most 40 ms per frame.
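
To make the geometric parameters concrete, here is a minimal sketch of how curvature, lane width and lateral vehicle position could be derived once each lane boundary has been fitted with a second-order polynomial x = a·y² + b·y + c in road-plane coordinates (metres). The polynomial model, the coordinate convention (vehicle at x = 0, y pointing ahead) and all function names are assumptions made for illustration; they are not taken from the group's method.

```python
def lateral(coeffs, y):
    """Lateral position x (m) of the curve x = a*y**2 + b*y + c at distance y (m)."""
    a, b, c = coeffs
    return a * y * y + b * y + c


def curvature(coeffs, y):
    """Curvature (1/m) of x = a*y**2 + b*y + c at longitudinal distance y (m)."""
    a, b, _ = coeffs
    slope = 2.0 * a * y + b
    return abs(2.0 * a) / (1.0 + slope ** 2) ** 1.5


def lane_geometry(left, right, y=0.0):
    """Return (curvature, lane_width, vehicle_offset) at distance y ahead.

    left, right: (a, b, c) coefficients of the two lane boundaries
    (hypothetical inputs, e.g. from a least-squares fit to detected markings).
    The vehicle is assumed to sit at x = 0, with x growing to the right, so a
    positive offset means the vehicle is to the right of the lane centre.
    """
    x_left = lateral(left, y)
    x_right = lateral(right, y)
    width = x_right - x_left
    centre = 0.5 * (x_left + x_right)
    # The lane centre line is taken as the average of the two boundary curves.
    centre_line = tuple(0.5 * (l + r) for l, r in zip(left, right))
    return curvature(centre_line, y), width, -centre
```

For example, `lane_geometry((0.001, 0.0, -1.5), (0.001, 0.0, 2.0))` describes a gently curving lane 3.5 m wide in which the vehicle sits 0.25 m to the left of the lane centre.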

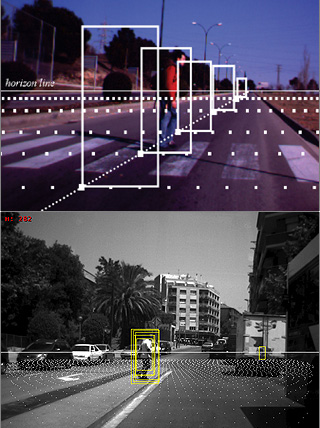

Pedestrians

- Pedestrian accidents are the second largest source of traffic injuries and fatalities in the European Union. For this reason, advanced driver assistance systems (ADAS), and specifically pedestrian protection systems (PPS), have become an important field of research for improving traffic safety. To avoid collisions with pedestrians they must first be detected, and camera sensors are key because of the rich set of cues and the high resolution they provide.

Currently there are two main lines of work: one based on images of the visible spectrum and the other, mainly motivated by nighttime driving, based on thermal infrared. The former has accumulated more literature because of the easier availability of CCD or CMOS sensors working in the visible spectrum, their lower price, better signal-to-noise ratio and resolution, and because most accidents happen in the daytime. At the moment, we work with visible-spectrum images.

In this context, the difficulties of pedestrian detection for PPS arise both from working with a mobile platform in an outdoor scenario, a recurrent challenge in all ADAS applications, and from dealing with a class whose appearance varies as much as that of pedestrians. The difficulties can be summarized as follows:

- targets have a very high intra-class variability (e.g., clothes, illumination, distance, size, etc.);

- the background can be cluttered and change in milliseconds;

- targets and camera usually follow different, unknown movements;

- a fast system reaction together with a very robust response is required.

Video Matching

- Video synchronization of two sequences can be stated as the alignment of their temporal dimension, that is, finding a (discrete) mapping c(t1) = t2 from time (frame number) in the first video to time in the second video, such that the frame of the first video at time t1 best matches its corresponding frame, at time c(t1), in the second video.
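
As an illustration of the mapping c(t1) = t2, the following minimal sketch aligns two sequences with dynamic time warping over per-frame descriptors. It is not the group's method: the descriptors, the Euclidean distance and the name `synchronize` are assumptions introduced for the example, and the only structure taken from the text is a discrete frame-to-frame mapping.

```python
from math import inf


def frame_distance(a, b):
    """Euclidean distance between two per-frame descriptors (hypothetical choice)."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def synchronize(video1, video2):
    """Return a list c where c[t1] is the frame of video2 matched to frame t1.

    video1, video2: non-empty lists of per-frame descriptors (lists of floats).
    Dynamic time warping is used, so the mapping never goes backwards in time.
    """
    n, m = len(video1), len(video2)
    if n == 0 or m == 0:
        raise ValueError("both videos need at least one frame")

    # cost[i][j]: minimal accumulated distance when aligning the first i
    # frames of video1 with the first j frames of video2.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = frame_distance(video1[i - 1], video2[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])

    # Backtrack along the optimal path; when several frames of video2 are
    # aligned with the same t1, keep the closest one.
    best = [None] * n
    i, j = n, m
    while i > 0 and j > 0:
        d = frame_distance(video1[i - 1], video2[j - 1])
        if best[i - 1] is None or d < best[i - 1][0]:
            best[i - 1] = (d, j - 1)
        steps = [
            (cost[i - 1][j - 1], i - 1, j - 1),
            (cost[i - 1][j], i - 1, j),
            (cost[i][j - 1], i, j - 1),
        ]
        _, i, j = min(steps)
    return [t2 for _, t2 in best]
```

In practice the descriptors would have to be robust to the viewpoint and lighting differences between the two recordings; the sketch leaves that choice open.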

The final goal is the comparison of videos: finding out what differs between a pair of videos shot from a moving platform that has followed roughly similar trajectories. This is possible because, once two videos are synchronized and under certain assumptions, corresponding frame pairs can be spatially registered. The two videos can then be compared pixel-wise.

We are exploring two possible applications of video comparison: outdoor surveillance at night and the comparison of vehicle headlights systems.

- Outdoor surveillance at night

- Comparison of vehicle headlights systems

- Road segmentation

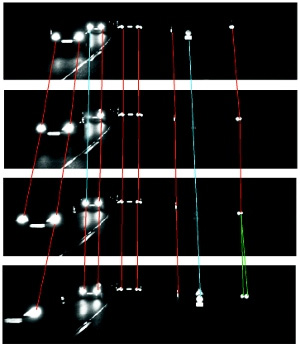

Headlights comparison

- Headlights are an important active safety element of vehicles. As such, they are continuously improved by automotive component manufacturers. This poses the problem of how to assess them. An important step in the evaluation of a headlights system consists of actually driving at night along a test track while observing its behaviour with regard to features like the lateral and longitudinal reach of the low beams and the homogeneity of the light cast on the road surface.

Dynamic headlights assessment is usually relative, that is, the performance of two headlights systems is compared. This comparison can be greatly facilitated by recording videos of what the driver sees, with a camera attached to the windshield. The problem then becomes how to compare a pair of such sequences. Two issues must be addressed: the temporal alignment or synchronization of the two sequences, and then the spatial alignment or registration of all the corresponding frames. We propose a semiautomatic but fast procedure for the former, and an automatic method for the latter.

In addition, we explore an alternative to the joint visualization of corresponding frames, called the bird's-eye view transform, and propose a simple fusion technique for better visualizing the headlights differences between two sequences.

Intelligent Headlights

- Intelligent vehicle lighting systems aim to automatically regulate the headlights' beam angle so as to illuminate as much of the road ahead as possible while avoiding dazzling other drivers. A key component of such a system is computer vision software able to distinguish blobs due to vehicle headlights and rear lights from those originating from road lamps and reflective elements like poles and traffic signs.

We have devised a set of specialized supervised classifiers that make such decisions based on blob features related to intensity and shape. Trained on a set of more than 60,000 blobs, they distinguish headlights, tail lights, poles and traffic signs quite well.

Despite the overall good performance, there remain challenging unsolved cases which hamper the adoption of such a system, notably faint and tiny blobs corresponding to quite distant vehicles, which disappear and reappear now and then. One reason for the classification errors is that each frame was classified independently of the others. Hence, we address the problem by tracking blobs in order to 1) obtain more feature measurements per blob along its track, 2) compute motion features, which we deem relevant for the classification, and 3) enforce temporal consistency of the classification. Our tracking method deals with blob occlusions as well as splits and merges.
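
The third point, temporal consistency, can be illustrated with a minimal sketch in which the per-frame class decisions collected along a blob's track are combined by a confidence-weighted vote. The voting rule, the label names and the function `track_label` are assumptions made for the example; the text does not state how consistency is actually enforced.

```python
from collections import defaultdict


def track_label(observations):
    """Combine per-frame classifications of one tracked blob into a single label.

    observations: list of (label, confidence) pairs, one per frame in which
    the blob was classified, with confidence in [0, 1] (hypothetical format).
    Returns the label with the largest accumulated confidence.
    """
    if not observations:
        raise ValueError("a track needs at least one observation")
    votes = defaultdict(float)
    for label, confidence in observations:
        votes[label] += confidence
    return max(votes, key=votes.get)


# A distant vehicle misclassified in one frame is still labelled consistently.
track = [("vehicle", 0.9), ("road lamp", 0.55), ("vehicle", 0.8), ("vehicle", 0.7)]
print(track_label(track))
```

A single-frame error is outvoted by the rest of the track, which is the kind of effect per-frame classification alone cannot provide.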